Ömer Erdinç Yağmurlu

I am Ömer Erdinç Yağmurlu, a master’s student in Computer Science at KIT, passionate about machine learning and robotics. Currently, I am a student researcher at the Intuitive Robots Lab, working on intuitive, embodied AI, imitation learning, real robot hardware, and 3D vision. Previously, I worked at TECO on the edge-ml.org project.

- GitHub

- Google Scholar

Publications

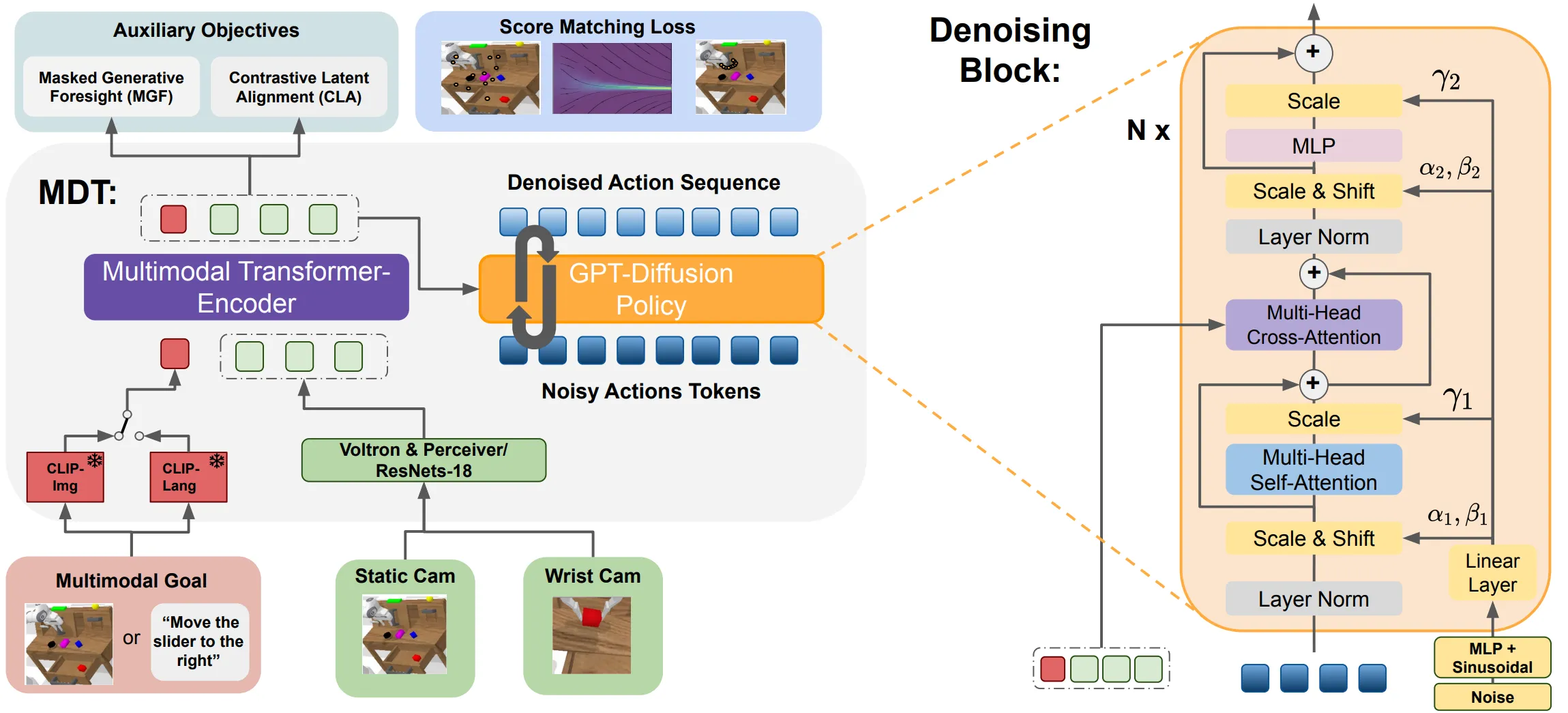

Multimodal Diffusion Transformer: Learning Versatile Behavior from Multimodal Goals

Robotics: Science and Systems (RSS), 2024

The Multimodal Diffusion Transformer (MDT) is a novel framework that learns versatile behaviors from multimodal goals with minimal language annotations. Built on a transformer backbone, MDT aligns image-based and language-based goal embeddings through two self-supervised objectives, enabling it to tackle long-horizon manipulation tasks. On benchmarks such as CALVIN and LIBERO, MDT outperforms prior methods by 15% while using fewer parameters. Its effectiveness is demonstrated in both simulated and real-world environments, highlighting its potential in settings with sparse language data.

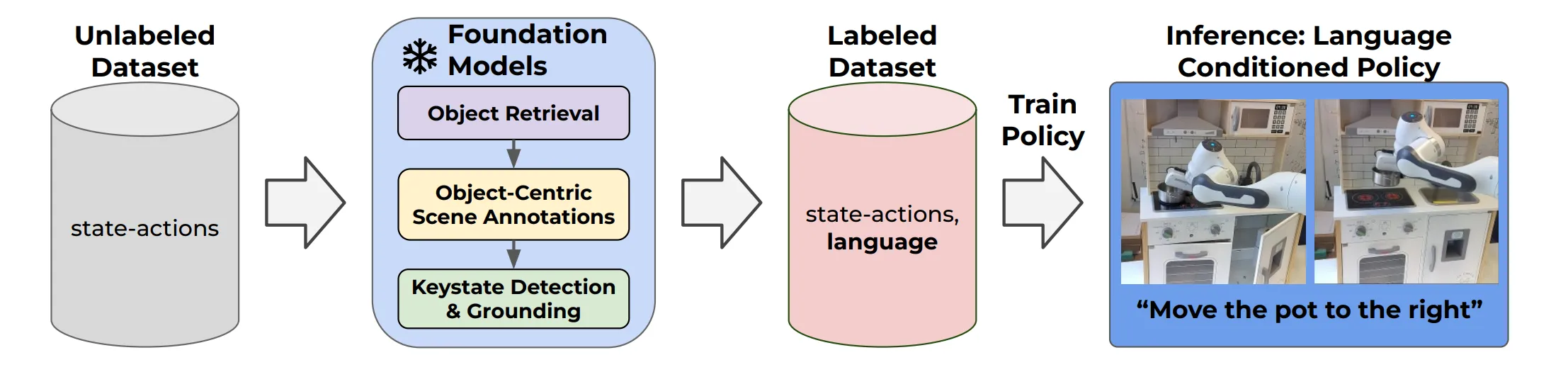

Scaling Robot Policy Learning via Zero-Shot Labeling with Foundation Models

Conference on Robot Learning (CoRL), 2024

NILS, the framework introduced in this paper, uses pre-trained vision-language models to detect objects, identify changes, segment tasks, and annotate behavior datasets. Evaluations on the BridgeV2 and kitchen play datasets demonstrate its effectiveness in annotating diverse, unstructured robot demonstrations while addressing the limitations of traditional human labeling methods.

Projects

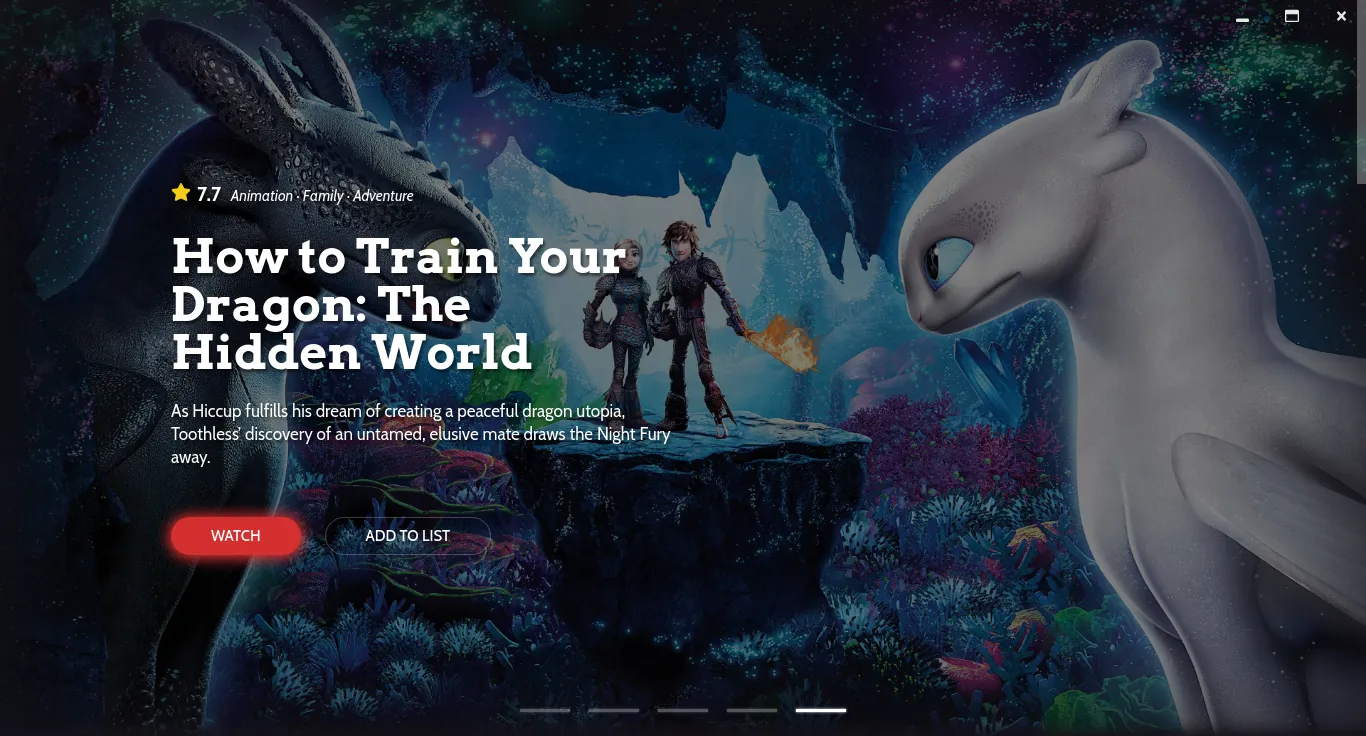

Popcorn

A Jellyfin-like movie and series viewing platform that lets you watch your local content on your own devices through an intuitive interface. I developed it during my first year of undergraduate CS studies, using Electron, JavaScript, MongoDB, and React.

A jellyfin-like movie/series viewing platform that allows you to watch your local content on your own devices in an intuitive interface. I developed it during my first year of undergraduate CS studies, using Electron, JS, MongoDB and React. AlgorivA

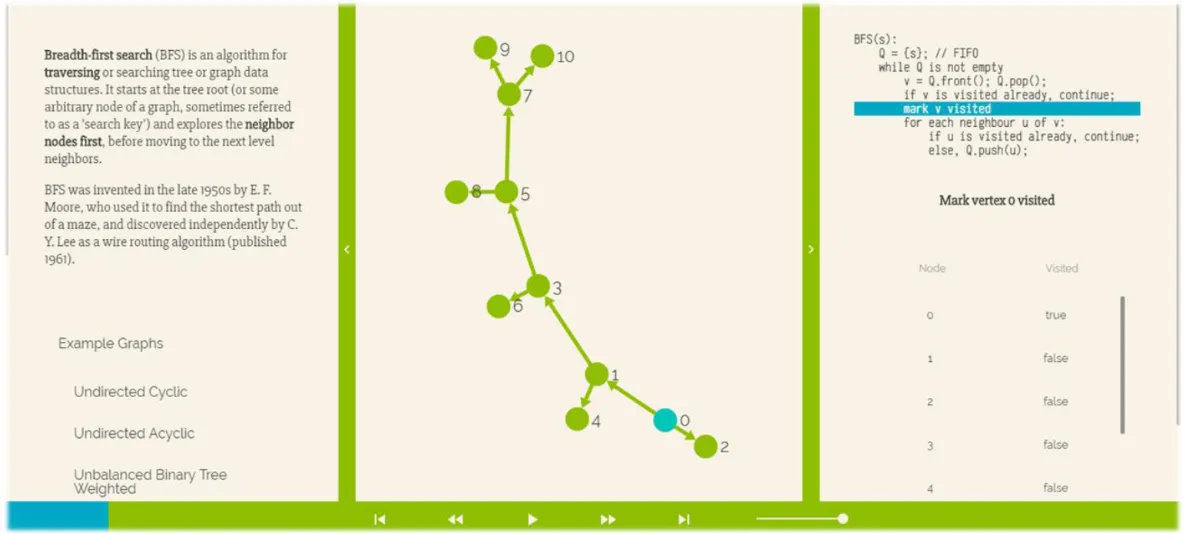

A modular algorithm visualization tool. I developed this project during my second year of high school for the Software Development Competition at the 2016 Technology Student Association Conference, where it placed 8th.

A JavaScript API for controlling the Sphero Ollie over BLE. I developed this project during my first year of high school after purchasing a Sphero Ollie Controlled Robot. It was later (partly) integrated into the official Sphero API for Ollie.